Software architecture and natural inspiration

Computer science is a relatively young discipline, but it is founded on logic, and good design rests on mathematical theory even if that's not immediately obvious while you are developing it. Some languages are built directly on mathematical ideas: SQL, for example, is rooted in relational algebra and set theory. Ultimately, every computer operation has to come down to some mathematical operation that can be carried out by a circuit.

It can be even more esoteric; when a developer takes a list of numbers and does something as simple as converting it to a list of strings like so

List<int> numbers = [ 1, 2, 3, 4, 5 ];

List<string> strings = numbers.Select(i => i.ToString()).ToList();

they may not realise it, but at that point they are mapping a function over a functor (which in this case also happens to be a monad). If the last part of that sentence meant nothing to you, that's perfectly fine; it doesn't have to.
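For readers who would like those terms unpacked a little, here is a minimal sketch, written in Python rather than C# purely to keep it short and self-contained. The names `fmap` and `bind` are hypothetical helpers chosen for illustration, not standard-library functions. `fmap` applies a function to every element while keeping the list shape, which is roughly the functor part; `bind` applies a function that itself returns a list and flattens the results, which is roughly what lets a list also behave as a monad.

```python
from typing import Callable, TypeVar

A = TypeVar("A")
B = TypeVar("B")


def fmap(func: Callable[[A], B], items: list[A]) -> list[B]:
    """Functor-style map: apply func to every element, keep the list shape."""
    return [func(item) for item in items]


def bind(items: list[A], func: Callable[[A], list[B]]) -> list[B]:
    """Monad-style bind: func returns a list per element; results are flattened."""
    return [result for item in items for result in func(item)]


numbers = [1, 2, 3, 4, 5]

# The same conversion as the C# example: a list of ints becomes a list of strings.
strings = fmap(str, numbers)

# Each number yields two strings, and bind flattens them into one list.
pairs = bind(numbers, lambda n: [str(n), str(n * 10)])
```

This is only an informal picture; the formal definitions also require certain laws to hold (for example, mapping the identity function must leave the list unchanged).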

Mathematics is the language of science and nature, and we can use it to mimic what nature has already built to improve our own creations, a process called biomimicry. From the Eastgate Centre in Harare, Zimbabwe, which is heated and cooled using the same airflow techniques as a termite nest, to the B-2 stealth bomber, which has much the same outline as a peregrine falcon in flight, there are many examples where evolution found an efficient solution first. After all, it's had a few billion years' head start on us.

An incredible example of this is using slime mold as a template for urban design. When population centres are modelled in a Petri dish with nutrient samples and a slime mold is introduced, the mold grows a network between the samples that can be used to plan efficient road networks linking those population centres.

But what does biomimicry have to do with computer science, and specifically software architecture? After all, there aren't many examples of computers in biology... well, except the brain.

There are many different models of how the human brain works, none of which describes it perfectly, for one simple reason.

If the human brain were so simple that we could understand it, we would be so simple that we couldn’t.

-- Emerson M. Pugh

In the 1960s the triune brain model was first described by the neuroscientist Paul D. MacLean.

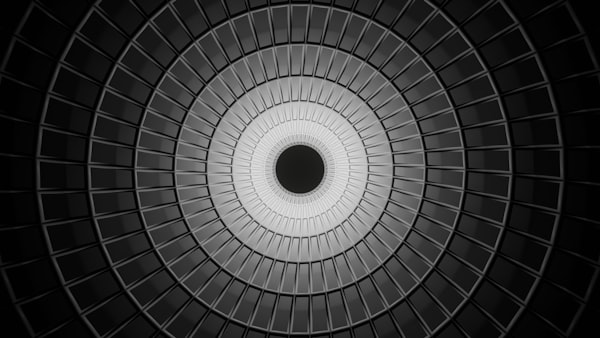

Although it has largely fallen out of favour within neuroscience, I do know something about software architecture, and in this model I recognise a familiar pattern: Onion Architecture, a close relative of hexagonal architecture (also known as ports and adapters).

The human brain is obviously a lot more complex than any software a human has ever designed or written, and even knowing that the triune model has its flaws, I can't help but think about the similarities in how the two are modelled.

Each contains a central kernel that handles the basic requirements, surrounded by extra layers that add new capabilities. Every layer depends on what is underneath it but has no dependency on the layers above, so it would keep working without any problem if those outer layers were removed or had never existed at all.
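The layering described above can be sketched in a few lines. This is a minimal illustration in Python, not a reference implementation of Onion Architecture, and every name in it (`Order`, `OrderStore`, `PlaceOrder`, `InMemoryOrderStore`) is hypothetical. The point to notice is the direction of the dependencies: the core refers to nothing else, the application layer refers only to the core and to an interface it defines itself, and the outermost adapter can be swapped or removed without touching anything inside it.

```python
from dataclasses import dataclass
from typing import Protocol


# --- Core (innermost layer): knows nothing about any other layer ---
@dataclass(frozen=True)
class Order:
    order_id: str
    total: float

    def is_payable(self) -> bool:
        return self.total > 0


# --- Application layer: depends on the core and on a port it defines itself ---
class OrderStore(Protocol):
    def save(self, order: Order) -> None: ...


class PlaceOrder:
    def __init__(self, store: OrderStore) -> None:
        self._store = store

    def execute(self, order_id: str, total: float) -> Order:
        order = Order(order_id, total)
        if not order.is_payable():
            raise ValueError("order total must be positive")
        self._store.save(order)
        return order


# --- Outer layer (adapter): depends inward, and can be swapped or removed ---
class InMemoryOrderStore:
    def __init__(self) -> None:
        self.saved: list[Order] = []

    def save(self, order: Order) -> None:
        self.saved.append(order)


store = InMemoryOrderStore()
placed = PlaceOrder(store).execute("A-1", 25.0)
```

Replacing `InMemoryOrderStore` with, say, a database-backed adapter would require no change to `Order` or `PlaceOrder`, which is the property the onion layering is meant to protect.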

The brain is able to multitask. While you are reading this your brain is also constantly sending signals to regulate your heartbeat, control hormone levels and check how the digestive system is doing. Some of that background information may feed back and become something the brain then needs to actively work on. Your brain checks how full your stomach is and suddenly you find yourself wondering what you're going to have for dinner later today.

Similarly a large application may have foreground tasks like actions performed by a user and background work like scheduled tasks that manage data or perform health checks on various systems.

The human brain receives information from the outside via multiple senses; an application receives information via an API or user interface. That information is then processed, and the brain analyses incoming information for a number of reasons simultaneously. It could be listening to someone speaking, but it will still be immediately drawn to any unexpected sound, like a baby crying, a knock on the door if we're expecting a caller, or a lion roaring (a lot of our subconscious thinking is still rooted in instinct, after all).

Meanwhile an application could process incoming data from multiple API endpoints or users simultaneously. That data may be actioned, causing some other function to be called or some data to be written to a database. An error may occur that would cause a function to exit immediately and return a message to the user who triggered it, just like when the brain hears one of those unexpected sounds and you immediately stop the conversation to determine what that sound was.

This is where code in an application differs from the brain. The brain draws attention away smoothly. After you realise that loud sound was just your neighbour slamming their door closed again, you return to the conversation you were having. You may not be able to pick up exactly where you were, but unlike a poorly written computer program your brain hasn't left itself in an invalid state with no way to recover. A computer program can crash and restart; the brain doesn't work that way.
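A program can get some of that smoothness by making sure an interruption never leaves its state half-updated. The sketch below, in Python, shows one common approach under simple assumptions: do the work on a copy and commit it in a single step only once every check has passed. The `Ledger` class and its account names are hypothetical and exist only for illustration.

```python
class Ledger:
    """Hypothetical example state: balances that must never go negative."""

    def __init__(self, balances: dict[str, int]) -> None:
        self.balances = dict(balances)

    def transfer(self, source: str, target: str, amount: int) -> None:
        # Work on a copy so a failure part-way through cannot corrupt real state.
        draft = dict(self.balances)
        draft[source] -= amount
        if draft[source] < 0:
            raise ValueError(f"insufficient funds in {source}")
        draft[target] += amount
        # Commit in a single step only once every check has passed.
        self.balances = draft


ledger = Ledger({"alice": 50, "bob": 10})

try:
    ledger.transfer("alice", "bob", 80)  # interrupted by an error
except ValueError:
    pass  # state is untouched, so the program can simply carry on

ledger.transfer("alice", "bob", 20)  # the next request succeeds as normal
```

Like the interrupted conversation, the failed transfer is abandoned, but nothing is left in an invalid state and the next piece of work carries on as normal.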

Software architecture has its roots in the work of Edsger Dijkstra, and has only really existed as a concept since the late 1960s.

In that time we've made great strides, moving from a single function that did everything, to separating different parts into modules of code, to layered architecture and onion architecture. We have even had entire shifts in the way we think about updating data, from mutating a single object representing some item to applying a history of events to that item's original state to track how it changes over time, a pattern known as event sourcing.
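To make that last shift concrete, here is a minimal sketch in Python of rebuilding an item's state from a history of events instead of mutating it in place. The `Item` type and the two event types are hypothetical, and a real system would also need to store and version its events; this only shows the core idea of replaying them.

```python
from dataclasses import dataclass, replace
from functools import reduce


@dataclass(frozen=True)
class Item:
    name: str
    quantity: int


@dataclass(frozen=True)
class Renamed:
    new_name: str


@dataclass(frozen=True)
class QuantityChanged:
    delta: int


def apply(state: Item, event: object) -> Item:
    """Return a new Item reflecting one event; the old state is never mutated."""
    if isinstance(event, Renamed):
        return replace(state, name=event.new_name)
    if isinstance(event, QuantityChanged):
        return replace(state, quantity=state.quantity + event.delta)
    raise TypeError(f"unknown event: {event!r}")


original = Item(name="widget", quantity=0)
history = [QuantityChanged(5), Renamed("gadget"), QuantityChanged(-2)]

# Replaying the full history gives the current state...
current = reduce(apply, history, original)
# ...and replaying a prefix shows what the item looked like earlier.
earlier = reduce(apply, history[:1], original)
```

Because the original state and the events are never modified, any past state can be reconstructed by replaying the relevant part of the history.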

So perhaps we can build more reliable and fault-tolerant applications by modelling them on how the most complex system we know of works. Perhaps a new approach to architecture could be modelled on how the brain works, not just on a biological and chemical level but also on a psychological level. Just as there are lessons for aerodynamics in the shape of a bird's wing, there are lessons we can learn from the shape of the human mind.

An application may not have an ego, an id or a subconscious but it would be foolish to think that there is nothing in neuroscience or psychology we can draw inspiration from. After all, evolution has already shown us an incredible example of something that can run a program. Every line of code ever written first ran in the mind of the person who wrote it, so why not model an application on the environment it first ran in?